Does Wikipedia editing activity forecast Oscar wins?

The Academy Awards just concluded, and much will be said about Ellen DeGeneres’s most-retweeted tweet. (My coauthors and I have posted an analysis here showing that these “shared media” or “livetweeting” events disproportionately award attention to already-elite users on Twitter.) I thought I’d use the time to debug some code I’m using to retrieve editing activity information from Wikipedia.

A simple, admittedly naive theory I wanted to test was whether editing activity could reliably forecast Oscar wins. Academy Award winners are selected from approximately 6,000 ballots, and the process is known both for intensive lobbying campaigns to sway voters and for tapping into the zeitgeist about larger social and cultural issues.

I assume that some of this lobbying and zeitgeist activity would manifest, in the aggregate, in edits to the English Wikipedia articles about the nominees. In particular, I measure two quantities: (1) the number of changes (revisions) made to the article and (2) the number of new editors making revisions. The hypothesis is simply that the nominee whose article has the most revisions and the most new editors should win. I look specifically at the time between the announcement of the nominees in early January and March 1 (an arbitrary cutoff).
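A minimal sketch of how these two quantities could be counted from an article's revision history. This is not the code used for the analysis. It assumes that a "new editor" means a user whose first-ever revision to the article falls inside the window, and the function name, the toy history, and the January 1 start date are all hypothetical.

```python
from datetime import datetime

def activity_in_window(revisions, start, end):
    """Count revisions and new editors for one article within [start, end].

    `revisions` is an iterable of (timestamp, user) pairs covering the
    article's full history, so that first appearances can be detected.
    Assumption: a "new editor" is a user whose first revision to this
    article falls inside the window.
    """
    first_seen = {}
    n_revisions = 0
    for ts, user in sorted(revisions):
        # Record the earliest revision by each user.
        if user not in first_seen:
            first_seen[user] = ts
        if start <= ts <= end:
            n_revisions += 1
    n_new_editors = sum(1 for ts in first_seen.values() if start <= ts <= end)
    return n_revisions, n_new_editors

# Toy history for a single hypothetical article.
history = [
    (datetime(2013, 11, 2), "alice"),
    (datetime(2014, 1, 20), "alice"),
    (datetime(2014, 1, 21), "bob"),
    (datetime(2014, 2, 10), "bob"),
    (datetime(2014, 2, 15), "carol"),
    (datetime(2014, 3, 5), "dave"),
]
window = (datetime(2014, 1, 1), datetime(2014, 3, 1))
print(activity_in_window(history, *window))  # (4, 2)
```

In the toy data, alice's January edit counts as a revision but not as a new editor, because her first edit predates the window. Under the hypothesis, the forecast winner in a category is simply the nominee with the largest counts.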

I’ve only run the analysis on the nominees for Best Picture, Best Director, Best Actor, Best Actress, and Best Supporting Actress (the queries for the Best Supporting Actor nominees were throwing some unusual errors, but I’ll update). The results below show that Wikipedia editing activity forecast the wins in Best Actor, Best Actress, and Best Supporting Actress, but did not do so for Best Picture or Best Director. This is certainly better than chance, and I look forward to expanding the analysis to other categories and prior years.
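To put "better than chance" in rough numbers, here is a small worked calculation. It assumes a baseline that picks one nominee uniformly at random in each category, and it assumes nine Best Picture nominees and five in each of the other four categories; neither assumption comes from the analysis itself.

```python
from fractions import Fraction

def hit_distribution(probs):
    """Distribution of the number of correct picks, given independent
    per-category success probabilities."""
    dist = [Fraction(1)]  # dist[k] = P(exactly k correct so far)
    for p in probs:
        new = [Fraction(0)] * (len(dist) + 1)
        for k, mass in enumerate(dist):
            new[k] += mass * (1 - p)   # this category is a miss
            new[k + 1] += mass * p     # this category is a hit
        dist = new
    return dist

# Assumed nominee counts per category (not stated in the post).
nominee_counts = {
    "Best Picture": 9,
    "Best Director": 5,
    "Best Actor": 5,
    "Best Actress": 5,
    "Best Supporting Actress": 5,
}
probs = [Fraction(1, n) for n in nominee_counts.values()]
dist = hit_distribution(probs)

expected_hits = sum(probs)        # 41/45, a bit under one category
p_three_or_more = sum(dist[3:])   # 83/1875, about 4.4%
print(float(expected_hits), float(p_three_or_more))
```

Under these assumptions, random guessing gets three or more of the five categories right a little over 4% of the time. With only five categories this is suggestive at best.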

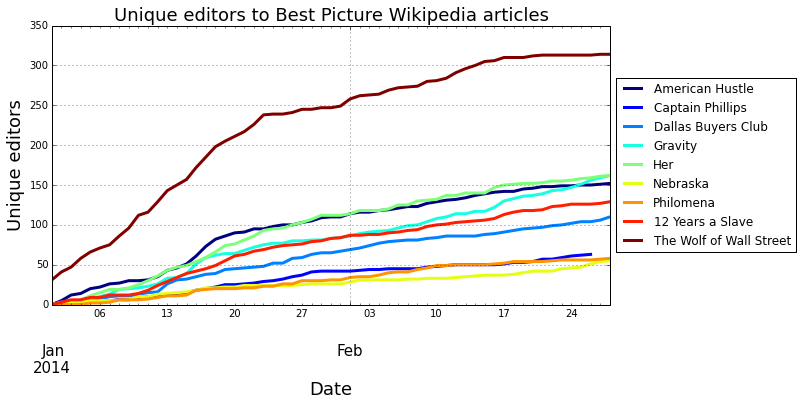

Best Picture

“The Wolf of Wall Street” showed remarkably strong growth in the number of new edits and new editors after January 1. However, “12 Years a Slave,” which ranked 5th by the end, actually won the award. A big miss.

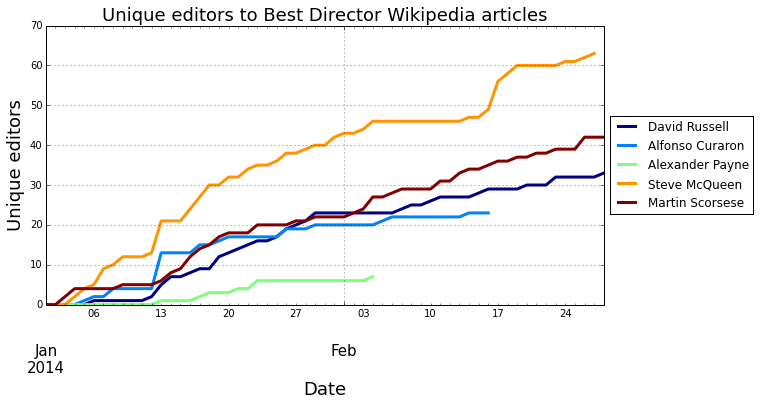

Best Director

The Wikipedia activity here showed strong growth for Steve McQueen (“12 Years a Slave”), but Alfonso Cuarón (“Gravity”) took the award despite coming in 4th on both metrics. Another big miss.

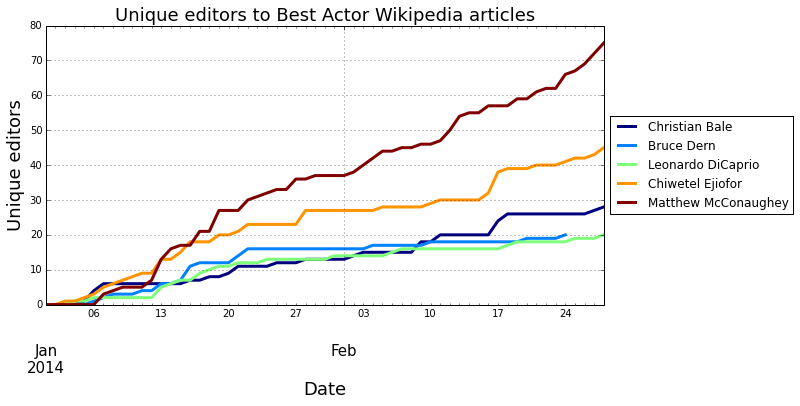

Best Actor

The counts of new edits and new editors are highly correlated because new editors necessarily show up as new edits. However, we see an interesting and very close race here between Chiwetel Ejiofor (“12 Years a Slave”) and Matthew McConaughey (“Dallas Buyers Club”) for edits, with McConaughey holding a stronger lead among new editors. This suggests that older editors were responsible for pushing Ejiofor higher (and he was leading early on), but McConaughey took the lead and ultimately won. Wikipedia won this one.

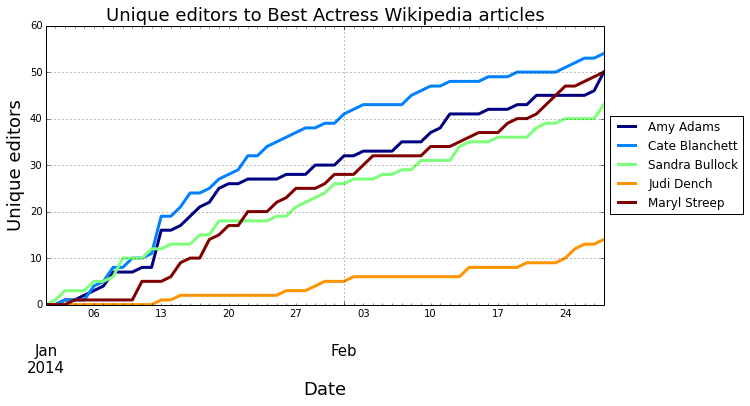

Best Actress

Poor Judi Dench: she appeared not to be in the running on either metric. Wikipedia activity forecast a Cate Blanchett (“Blue Jasmine”) win, although the race appeared to be close among several candidates, if the construct is to be believed. Wikipedia won this one.

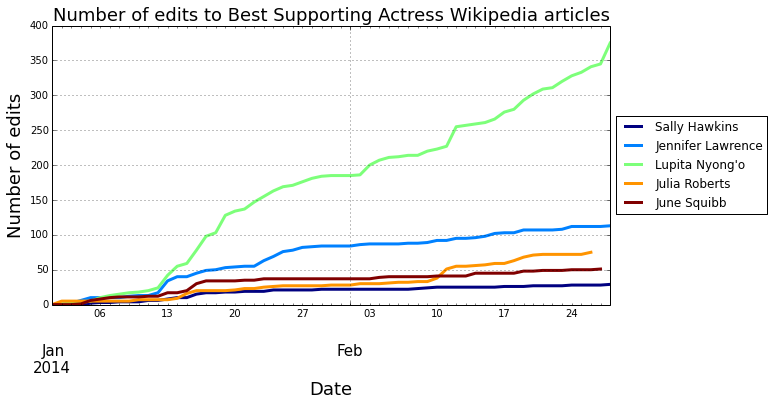

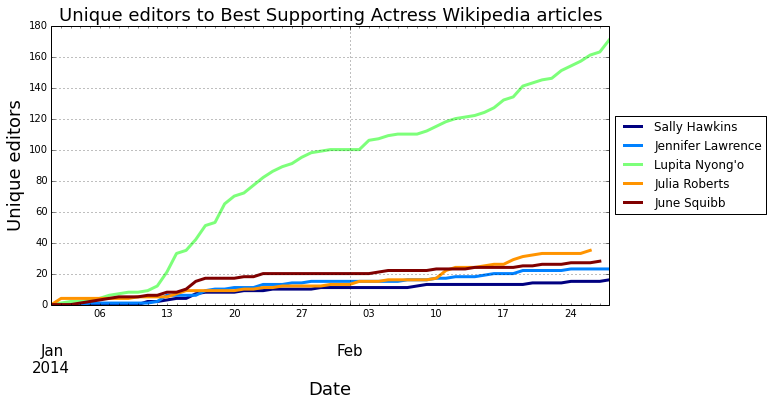

Best Supporting Actress

Lupita Nyong’o (“12 Years a Slave”) accumulated a huge lead over the other nominees by Wikipedia activity and won the award.

Other Categories and Future Work

I wasn’t able to run the analysis for Supporting Actor because the Wikipedia API seemed to poop out on Bradley Cooper queries, but it may be a deeper bug in my code too. This analysis can certainly be extended to the “non-marquee” nominee categories as well, but I didn’t feel like typing that much.
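For reference, a hedged sketch of pulling an article's revision history from the MediaWiki API, following continuation tokens and surfacing error responses instead of failing silently. This is not the code used above and not a diagnosis of the Bradley Cooper failure. The function names and the User-Agent string are hypothetical, and the `fetch` argument exists only so the paging logic can be exercised without a network call.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://en.wikipedia.org/w/api.php"

def http_get_json(params):
    """Issue one GET request to the API and decode the JSON response."""
    url = API_URL + "?" + urllib.parse.urlencode(params)
    # Hypothetical User-Agent; replace with one that identifies your tool.
    req = urllib.request.Request(
        url, headers={"User-Agent": "oscar-edits-sketch/0.1 (example)"}
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)

def fetch_revisions(title, fetch=http_get_json):
    """Return every revision (timestamp and user) of one article, oldest first."""
    params = {
        "action": "query",
        "format": "json",
        "prop": "revisions",
        "titles": title,
        "rvprop": "timestamp|user",
        "rvlimit": "max",
        "rvdir": "newer",
    }
    revisions = []
    while True:
        data = fetch(params)
        if "error" in data:
            # Surface API-level errors rather than returning partial data.
            raise RuntimeError(f"API error for {title!r}: {data['error']}")
        for page in data.get("query", {}).get("pages", {}).values():
            revisions.extend(page.get("revisions", []))
        if "continue" not in data:
            return revisions
        # Merge the continuation tokens into the next request.
        params = {**params, **data["continue"]}

# Example call (requires network access):
# revs = fetch_revisions("Bradley Cooper")
```

Each returned item is a dictionary with `timestamp` and `user` keys, which is enough to count revisions and first-time editors in a date window.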

I will extend this analysis to other categories and to prior years’ awards to see if there are any discernible patterns for forecasting. There may be considerable variance between categories in the reliability of this simple heuristic: if I wanted to defend my simplistic model, I could argue that Director and Picture are more politicized than the rest. This type of approach might also be used to compare different awards shows to see if some diverge more than others from aggregate Wikipedia preferences. The hypothesis here is a simple descriptive heuristic, and more extensive statistical models that incorporate features such as revenue, critics’ scores, and nominees’ award histories (“momentum”) may produce more reliable results.

Conclusion

Wikipedia editing activity over the two months leading up to the 2014 Academy Awards accurately forecast the winners of the Best Actor, Best Actress, and Best Supporting Actress categories but clearly missed the winners of the Best Picture and Best Director categories. These results suggest that differences in editing behavior may, in some cases, reflect collective attention to and aggregate preferences for some nominees over others. Because Wikipedia is a major clearinghouse for individuals who both seek and shape popular perceptions, these behavioral traces may have significant implications for forecasting other types of popular preference aggregation, such as elections.